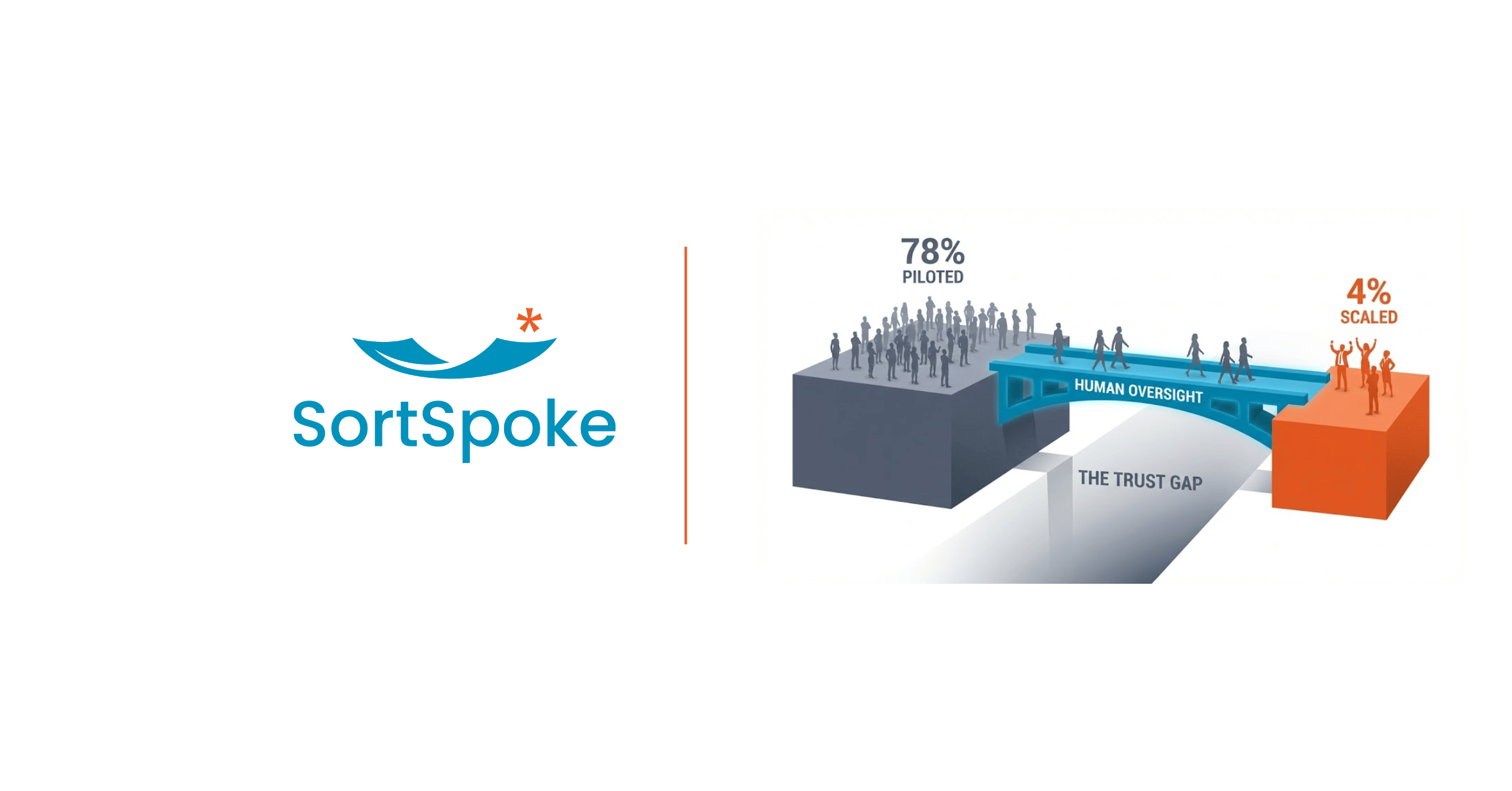

The Trust Gap: Why 78% of Insurance AI Pilots Never Scale

TL;DR

- The scale gap is real: 78% of P&C insurers have experimented with AI, but only 4% have scaled it meaningfully.

- Trust is the bottleneck: Trust in AI jumps from 16% to 60% when human oversight is added. Without trust, teams route around the system.

- Architecture matters: The difference between pilots that stall and systems that scale comes down to building human oversight in from day one.

Here's a number that should make every insurance technology leader uncomfortable: 78% of P&C insurers have experimented with AI in claims operations. Only 4% have scaled it meaningfully.

Let that sink in. Three-quarters of the industry tried. Less than one in twenty succeeded.

This isn't a capability gap. The AI technology largely works. It's a trust gap—and understanding that gap is the key to finally moving past pilot purgatory.

The Scale Gap

The pattern is consistent across carriers, regions, and use cases.

A promising pilot launches. The AI handles document extraction or claim triage with impressive accuracy in the test environment. Leadership sees the ROI projections. The team prepares for enterprise rollout.

And then it stalls.

The reasons vary. "The team isn't adopting it." "We found accuracy issues at scale." "There were compliance concerns." "It didn't integrate well with existing workflows." These are the explanations that show up in post-mortems.

But they're symptoms, not causes.

Research from Bain & Company shows that among insurers who haven't scaled AI in claims operations, 27% are actively pursuing comprehensive transformation while the rest remain stuck in limited deployment or waiting mode. The technology works in pilots. It fails at scale. And the industry keeps trying the same approach expecting different results.

The Trust Gap

The real problem shows up in a single statistic.

A 2025 survey by Wisedocs and PropertyCasualty360 asked insurance claims professionals about their trust in AI outputs. The results: only 16% trusted AI outputs when the AI operated alone.

But when human oversight was added to the process—when experts reviewed and validated AI decisions—trust jumped to 60%.

That's a 4x multiplier. Same AI. Same outputs. The only difference: human verification.

This trust gap explains almost everything about why pilots fail to scale.

In a controlled pilot environment, experts are closely involved. They're watching the outputs, catching errors, providing feedback. Trust builds because humans are in the loop, even if that loop isn't formally designed into the system.

When you try to scale, that informal oversight disappears. The AI is supposed to run autonomously. And that's when trust collapses.

Teams don't adopt systems they don't trust. They route around them. They check the AI's work manually anyway, eliminating the efficiency gains. Or they simply stop using the tool and revert to the old process.

Why Accuracy Concerns Kill Adoption

When the Wisedocs survey asked about barriers to AI adoption, the responses were revealing:

- 54% cited accuracy concerns — doubts about AI output correctness

- 49% cited compliance and regulatory risks — fear of breaching rules

- 44% cited integration challenges — difficulty fitting AI into workflows

- 44% cited lack of trust in AI output — low confidence from staff

The top two barriers—accuracy and trust—are really the same barrier viewed from different angles. And they're both solved by the same thing: human oversight.

Here's why this matters so much in insurance.

AI systems, especially large language models, make mistakes. Anyone who's worked with LLMs knows about "hallucinations"—confident outputs that are simply wrong. In a low-stakes environment, you catch and correct these errors. In insurance, they can mean denied claims, incorrect premiums, or compliance violations.

Without human verification, every AI error becomes a business problem. With human verification, errors are caught before they impact customers, regulators, or the bottom line.

The trust barrier isn't irrational skepticism. It's a reasonable response to the reality that AI systems aren't perfect—and in insurance, the consequences of imperfection are significant.

Why Full Automation Fails in Insurance

The full automation vision that drove early AI adoption in insurance was compelling: remove humans from routine processes, cut costs, achieve infinite scale.

The vision was also wrong.

Insurance work isn't routine in the way that manufacturing is routine. Insurance documents are messy—handwritten notes, inconsistent formats, missing information. Insurance decisions require judgment—weighing factors the model wasn't trained on, recognizing context from industry experience. Insurance stakes are high—a wrong decision affects real people with real claims.

This is the gap between "AI that can extract data from documents" and "AI that can underwrite." The extraction is tractable. The judgment isn't.

Full automation approaches tried to bridge this gap by adding more data, building bigger models, and tuning for higher accuracy. But even 99% accuracy means one error per hundred decisions—and at enterprise scale, that's thousands of errors per day.

The regulatory environment is tightening in response. Nineteen U.S. states have adopted NAIC guidelines for AI governance in insurance. California and Colorado now require human review for high-impact AI decisions. The trend is clear: regulators don't trust fully autonomous AI in insurance, and they're codifying that distrust into law.

The Scaling Paradox

Here's the counterintuitive truth that successful AI implementations have discovered: human oversight enables more automation, not less.

When teams trust the AI system, they use it. When they use it, adoption increases. When adoption increases, the AI gets more feedback and training data. When the AI improves, trust increases further.

This is a virtuous cycle—but it only starts when humans are in the loop from day one.

Without that trust foundation, you get the opposite cycle. Teams don't trust the system. They don't use it, or they check everything manually. The AI doesn't get feedback to improve. Trust erodes further. The pilot stalls.

The 4% that scaled didn't scale by removing humans from the process. They scaled by building human oversight into the architecture, then gradually expanding what the AI could handle as trust developed.

While most AI pilots stall at the proof-of-concept stage, SCM Insurance achieved an 80% reduction in claims processing time using human-in-the-loop AI. The difference? Human oversight was built into the architecture from day one—not bolted on as an afterthought.

What Actually Scales

The difference between pilots that stall and systems that scale comes down to architecture decisions made before the first line of code is written.

Systems designed for human verification from day one build trust into the workflow. AI outputs aren't treated as final decisions—they're treated as recommendations that humans review, validate, and occasionally correct. This isn't a limitation; it's the feature that enables adoption.

Confidence scores that route edge cases to experts let the AI handle what it handles well while flagging uncertainty for human review. The AI doesn't try to be perfect; it tries to be transparent about what it knows and doesn't know.

Audit trails that satisfy compliance ensure every decision can be explained and defended. Regulators don't just want accuracy—they want accountability. Human-in-the-loop architectures provide both.

Feedback loops that improve over time turn human corrections into training data. Every time an expert adjusts an AI output, the system gets smarter. The human oversight isn't just catching errors—it's teaching the AI to make fewer errors.

This approach explains why the best insurance AI keeps humans in the driver's seat. It's not AI that needs babysitting. It's AI that earns trust through transparency, accountability, and continuous improvement.

Moving Past Pilot Purgatory

If you've been stuck in the pattern—promising pilots that don't scale, leadership enthusiasm that fades to frustration, teams that resist adoption—the path forward isn't trying harder with the same approach.

The path forward is recognizing that the trust gap isn't a problem to overcome. It's a signal about what's needed for scale.

Your teams don't trust fully autonomous AI because they shouldn't. The technology isn't ready for unsupervised deployment in high-stakes insurance decisions, and your experienced professionals know it.

Human-in-the-loop isn't a compromise or a stepping stone. It's the architecture that works—the one that matches the reality of insurance work and builds the trust foundation that enables scale.

The 78% that experimented but didn't scale weren't using the wrong AI. They were using the right AI with the wrong architecture.

The 4% that succeeded figured out that trust is the gatekeeping variable, and human oversight is how you unlock it.

The Bottom Line

Pilot purgatory isn't inevitable. The difference between experiments that stall and systems that scale is trust—and trust requires human oversight built into the architecture, not bolted on as an afterthought.

If you've seen promising AI pilots fail to get traction, you're not alone. 78% of your peers have been there. But you don't have to stay there.

Ready to see what scales? Book a demo to see human-in-the-loop AI that's designed for enterprise adoption from day one.