TL;DR

- 95% of AI pilots fail because generic AI solutions can't handle insurance's unique complexity and regulatory demands

- Insurance-specific challenges require specialized AI architecture, not just better implementation processes

- Human-in-the-loop isn't just compliance—it's the technical approach that delivers superior accuracy for complex document processing

- The 5% that succeed use AI systems designed specifically for insurance workflows, document variety, and regulatory requirements

- Immediate value is possible when AI architecture matches industry complexity from Day 1

Imagine trying to use a consumer chatbot to underwrite a complex commercial property submission with 47 attachments across 12 different document formats, each requiring different risk assessment criteria based on state-specific regulations. The failure would be immediate and obvious. Yet this scenario perfectly illustrates why a staggering 95% of AI pilots in insurance fail to deliver financial impact—most organizations are trying to adapt generic AI solutions to an industry that demands specialized approaches.

A recent Carrier Management analysis by Matthew Maginley reveals that these failures occur because "teams launch one-off experiments without the fluency, structure, or blueprint to ever reach scale." But buried deeper in this analysis is a more fundamental truth: the insurance industry's unique complexity exposes the limitations of generic AI solutions that work perfectly fine in simpler business environments.

The 5% of AI implementations that do succeed share a critical characteristic—they use AI architecture specifically designed for insurance's document variety, regulatory complexity, and risk assessment demands rather than trying to force-fit generic solutions into specialized workflows. Understanding why insurance AI projects fail provides crucial insights for avoiding the same pitfalls.

Why Insurance Breaks Generic AI: The Complexity Problem

The Document Diversity Challenge

Insurance operations involve a level of document complexity that most AI systems simply weren't designed to handle. Consider what arrives in a typical underwriting queue: ACORD applications with handwritten notes, loss run spreadsheets with custom formatting, emails containing critical risk information, scanned PDFs of property surveys, and broker presentations mixing text, images, and financial data.

Generic AI solutions excel in controlled environments with standardized inputs. They fail catastrophically when faced with the endless variety of formats, layouts, and information presentation styles that characterize real insurance submissions. A system trained on clean digital forms struggles with handwritten margin notes that often contain the most critical underwriting information.

This challenge extends beyond simple document variety. Insurance submission triage requires understanding not just what's in documents, but how different document types relate to risk assessment and business priorities. Generic AI lacks this contextual intelligence.

The Regulatory Complexity Reality

Insurance operates under a regulatory framework that makes most other industries look simple. Every state has different rules, agents must prove recommendations serve customer interests, and reinsurance contracts constrain what can be sold and priced. This creates what Maginley describes as "a dynamic, challenging environment" where AI systems must understand not just documents but the legal and regulatory context that governs how information can be used.

Generic AI tools lack this regulatory intelligence. They can extract data but can't interpret whether that data meets state-specific requirements, complies with state unfair-discrimination and rating laws, or aligns with reinsurance treaty terms. This gap between data extraction and regulatory-aware processing explains why technically successful pilots fail when deployed in production environments.

The NAIC Model Bulletin on the Use of Artificial Intelligence Systems by Insurers makes this challenge explicit: it expects insurers to govern and document their AI systems and to be able to explain AI-supported decisions during regulatory review. Generic AI solutions often operate as black boxes that cannot meet these transparency expectations.

The Risk Assessment Sophistication Gap

Underwriting requires contextual understanding that goes far beyond pattern recognition. A manufacturing company's liability exposure depends not just on revenue and employee count but on product types, distribution channels, quality control processes, and historical loss patterns. An AI system might successfully extract basic company information but miss the nuanced risk factors that determine appropriate coverage and pricing.

This sophistication gap becomes apparent when generic AI solutions encounter edge cases—which in insurance represent a significant portion of profitable business opportunities. The submissions that don't fit standard patterns often represent the highest-value prospects, but generic AI systems either reject them entirely or process them incorrectly.

Successful underwriting automation depends on knowing when AI should augment human expertise instead of attempting to fully automate complex risk assessment.

The Technical Architecture That Actually Works in Insurance

Why Human-in-the-Loop Is Technical Superiority, Not Just Compliance

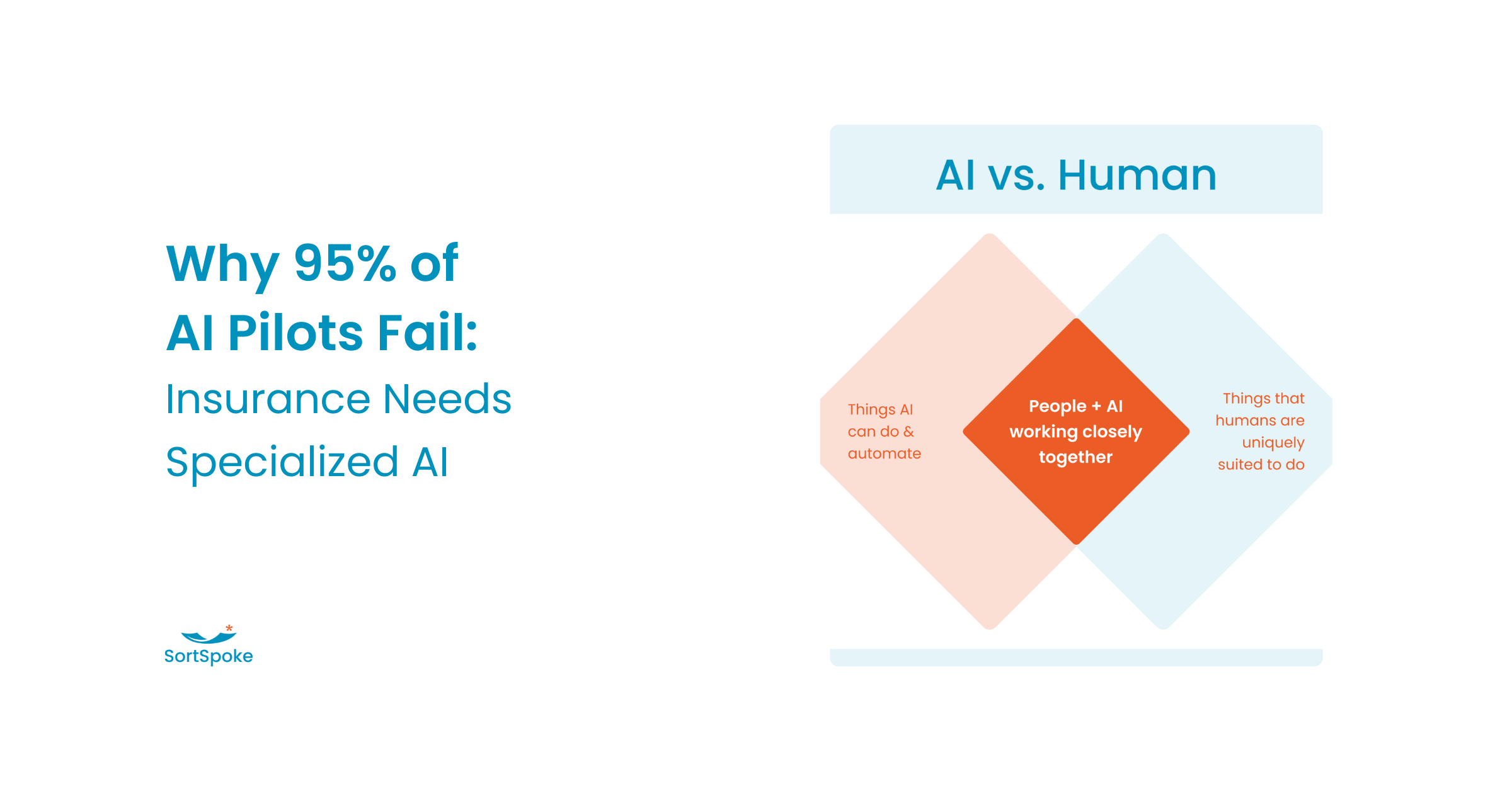

The most successful AI implementations in insurance use human-in-the-loop architecture not because regulators require it, but because it delivers superior results for complex document processing tasks. This approach combines machine speed with human judgment in a way that handles insurance's inherent variability and complexity.

When an AI system encounters an unusual document format or ambiguous risk information, human-in-the-loop architecture provides immediate escalation to experts who can resolve the issue and train the system for similar future cases. This creates a learning loop that continuously improves performance rather than requiring system redesign every time new document types or edge cases appear.

Generic AI systems lack this adaptive capability. They either process information incorrectly or fail entirely when encountering variations from their training data. Human-in-the-loop architecture transforms these failure points into learning opportunities that strengthen the system over time.
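The escalation step described above can be sketched in a few lines. This is a minimal sketch, assuming the extraction model returns a per-field confidence score between 0 and 1; the `ExtractedField` type, the `route_fields` function, the sample values, and the 0.85 threshold are hypothetical illustrations, not SortSpoke's or any other vendor's API.

```python
from dataclasses import dataclass


@dataclass
class ExtractedField:
    """One value pulled from a submission document (hypothetical structure)."""
    name: str
    value: str
    confidence: float  # model's self-reported confidence, assumed to be in [0, 1]


def route_fields(fields, threshold=0.85):
    """Split extracted fields into an auto-accepted list and a human-review queue.

    Fields at or above the threshold pass straight through; everything else
    is escalated to an underwriter instead of being silently accepted.
    """
    accepted, needs_review = [], []
    for field in fields:
        if field.confidence >= threshold:
            accepted.append(field)
        else:
            needs_review.append(field)
    return accepted, needs_review


fields = [
    ExtractedField("insured_name", "Acme Fabrication LLC", 0.97),
    ExtractedField("total_insured_value", "$4,200,000", 0.91),
    ExtractedField("roof_age", "approx 15 yrs (handwritten)", 0.42),
]
accepted, needs_review = route_fields(fields)
print([f.name for f in accepted])      # ['insured_name', 'total_insured_value']
print([f.name for f in needs_review])  # ['roof_age']
```

The threshold is a tuning choice: raising it sends more fields to underwriters, lowering it lets more unreviewed errors through.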

The facts about AI in underwriting reveal that successful implementations focus on augmentation rather than replacement, enabling underwriters to process 5X more submissions while maintaining control and expertise.

Insurance-Specific Intelligence vs. Generic Processing

The AI systems that succeed in insurance incorporate domain-specific intelligence from the ground up. They understand insurance terminology, recognize risk factors specific to different lines of business, and can interpret the relationships between different pieces of information within insurance contexts.

For example, a construction company's safety record isn't just about OSHA violations—it includes training programs, equipment maintenance protocols, subcontractor management practices, and loss control investments. Insurance-specific AI systems can identify and evaluate these interconnected risk factors, while generic systems treat them as isolated data points.

This domain intelligence extends to document understanding as well. Insurance-specific AI recognizes that a broker's email summary might contain more critical information than formal application forms, that loss run narratives often reveal patterns not apparent in numerical data, and that supporting documents frequently contain deal-breaking information buried in technical details.

Real-Time Learning That Matches Industry Dynamics

Insurance markets change rapidly—new risks emerge, regulations evolve, and competitive pressures shift pricing strategies. AI systems designed for insurance must adapt continuously rather than requiring periodic retraining cycles that work for more stable industries.

The most effective implementations include real-time learning capabilities that improve accuracy based on underwriter feedback and market changes. When underwriters correct AI assessments or override automated recommendations, those corrections immediately improve how similar cases are handled in the future.

This continuous adaptation capability is essential for maintaining accuracy in an industry where regulatory changes, market conditions, and risk landscapes evolve constantly. Generic AI systems that require formal retraining cycles can't keep pace with insurance's dynamic environment.
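The feedback loop can be illustrated with a deliberately simple correction memory. This is a minimal sketch, assuming a correction can be keyed by a normalized (document type, field name, raw extracted text) tuple; `CorrectionMemory` and the example values are hypothetical. Production systems would generalize beyond exact matches (for example through retrieval over similar cases or model updates); the sketch only shows the shape of the loop, where a correction recorded once is applied the next time the same pattern appears.

```python
class CorrectionMemory:
    """Remembers underwriter corrections and reapplies them to matching cases."""

    def __init__(self):
        self._corrections = {}

    @staticmethod
    def _key(doc_type, field, raw_value):
        # Normalize case and whitespace so trivially different strings match.
        return (doc_type.lower(), field.lower(), " ".join(raw_value.lower().split()))

    def record(self, doc_type, field, raw_value, corrected_value):
        """Store an underwriter's correction of a model output."""
        self._corrections[self._key(doc_type, field, raw_value)] = corrected_value

    def apply(self, doc_type, field, raw_value, model_value):
        """Return the remembered correction if one exists, else the model's value."""
        return self._corrections.get(self._key(doc_type, field, raw_value), model_value)


memory = CorrectionMemory()
# An underwriter fixes a misread construction class on a property survey.
memory.record("property_survey", "construction_class", "Jstd Masonry", "Joisted Masonry")
print(memory.apply("property_survey", "construction_class", "JSTD  masonry", "Unknown"))
# Joisted Masonry
print(memory.apply("property_survey", "construction_class", "Frame", "Frame"))
# Frame
```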

Best practices for insurance AI include building these learning mechanisms into the initial deployment rather than treating them as advanced features to add later.

The Fatal Flaws That Doom Generic AI in Insurance

Fatal Flaw #1: Template Thinking in a Template-Free World

Generic AI solutions often require structured inputs or standardized formats to function effectively. Insurance operations generate the opposite—endless variety in document formats, information presentation, and data organization. A system that works perfectly with clean ACORD forms fails completely when processing broker emails, handwritten notes, or custom spreadsheet formats.

As the Carrier Management analysis notes, success in a silo doesn't scale. A tool that processes one state's workers' compensation applications may fail completely when applied to commercial property submissions in a different state with different forms, requirements, and risk factors.

This challenge becomes particularly acute in underwriting submission triage, where systems must handle everything from structured applications to unstructured broker emails while maintaining accuracy and compliance.

Fatal Flaw #2: Trying to Replace Human Expertise Instead of Enhancing It

Generic AI solutions often attempt to do too much—not only extracting data but also interpreting its meaning and making contextual judgments that require human expertise. When an AI system tries to automatically classify a construction company's risk level based on revenue trends, or determine whether a manufacturer's quality control processes are adequate, it's attempting tasks that require deep industry knowledge and contextual understanding.

The problem isn't that AI can't extract the revenue numbers accurately—it's that generic systems try to make the interpretation decisions that should remain with underwriters. A spike in quarterly revenue might indicate business growth, seasonal variation, a one-time contract, or acquisition activity. Only an experienced underwriter can determine which interpretation applies and what it means for risk assessment.

This creates what Maginley identifies as weak business cases that get ignored by leadership. Generic AI systems promise to automate decision-making but deliver inconsistent results because they lack the contextual expertise that underwriters bring. When AI tries to replace human judgment rather than enhance it, the results are often technically impressive but practically unusable.

The disconnect between technical performance and business value explains why many technically successful pilots fail to gain executive support for scaling. Executives recognize that underwriting requires human expertise for complex risk assessment, regulatory compliance, and customer relationships. AI systems that attempt to eliminate this expertise create more problems than they solve, leading to failed implementations regardless of their technical capabilities.

The superior approach focuses AI on what it does best—accurate, fast data extraction from complex documents—while preserving human expertise for what humans do best: interpreting that data within the context of risk assessment, market conditions, and regulatory requirements. This is why human-in-the-loop AI systems deliver better results than pure automation attempts in insurance operations.
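To make this division of labor concrete, the revenue-spike example can be sketched as code that detects the fact and leaves the interpretation open. This is a minimal sketch, assuming quarterly revenue has already been extracted; `ReviewFlag`, `flag_revenue_spikes`, the 25% threshold, and the revenue figures are hypothetical. The candidate explanations are the four listed in the text, and the code attaches them without choosing among them.

```python
from dataclasses import dataclass
from typing import Optional

# Possible readings of a revenue spike, taken from the text.
# The code attaches them to a flag but never chooses among them.
CANDIDATE_EXPLANATIONS = (
    "business growth",
    "seasonal variation",
    "one-time contract",
    "acquisition activity",
)


@dataclass
class ReviewFlag:
    """A fact the machine detected, waiting for a human interpretation."""
    quarter: str
    change_pct: float
    candidates: tuple = CANDIDATE_EXPLANATIONS
    underwriter_interpretation: Optional[str] = None  # set by a human reviewer


def flag_revenue_spikes(quarterly_revenue, threshold_pct=25.0):
    """Flag quarter-over-quarter revenue increases above threshold_pct.

    quarterly_revenue is an insertion-ordered dict of quarter label -> revenue.
    """
    flags = []
    periods = list(quarterly_revenue.items())
    for (_, previous), (quarter, current) in zip(periods, periods[1:]):
        if previous <= 0:
            continue  # percentage change is undefined; skip rather than guess
        change_pct = (current - previous) / previous * 100
        if change_pct > threshold_pct:
            flags.append(ReviewFlag(quarter, round(change_pct, 1)))
    return flags


revenue = {"2023-Q1": 1_200_000, "2023-Q2": 1_250_000,
           "2023-Q3": 1_900_000, "2023-Q4": 1_850_000}
for flag in flag_revenue_spikes(revenue):
    print(flag.quarter, flag.change_pct)  # 2023-Q3 52.0
```

The underwriter, not the code, fills in `underwriter_interpretation`.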

Fatal Flaw #3: Black Box Decisions in a Transparency-Required Industry

Perhaps most critically, generic AI solutions often operate as black boxes that can't explain their reasoning or provide the audit trails required for regulatory compliance. The NAIC Model Bulletin on AI Systems, adopted by 24+ states, sets expectations for governance, transparency, and documentation that black-box systems struggle to meet.

Generic AI systems that can't explain their decisions create significant legal and financial risk for insurers. They may produce technically correct results but lack the transparency needed for regulatory examinations, consumer protection compliance, or claims disputes.

This regulatory requirement isn't just about compliance; it's about building sustainable competitive advantages through AI systems that can be trusted, validated, and improved over time based on a clear understanding of how they work.

Learning From Failure: Why the Right Architecture Prevents Most Problems

The Counterintuitive Truth About AI Success in Insurance

The Carrier Management analysis reveals a counterintuitive insight that challenges conventional thinking about AI implementation: "Here's the part few admit out loud: failure is the point." However, this only applies when using AI systems designed to learn from failure rather than systems that simply break when encountering unexpected situations.

"A strong pilot should surface risk, expose misalignment, and include fluent operators shadowing outputs to catch errors early," Maginley explains. "That discipline prevents flawed workflows from scaling into costly mistakes." This approach requires AI architecture specifically designed to handle failure gracefully and convert errors into learning opportunities.

Generic AI systems tend to fail catastrophically or produce incorrect results without warning. Insurance-specific AI architecture includes confidence scoring, error detection, and escalation mechanisms that prevent problematic decisions from impacting business operations while using those situations to improve future performance.
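Error detection does not have to rely only on the model's own confidence score; cross-checks against the document itself can catch silent mistakes. This is a minimal sketch, assuming a loss run has been extracted into per-claim incurred amounts plus the total stated on the document; `reconcile_loss_run`, the one-dollar tolerance, and the amounts are hypothetical. If the extracted line items don't reconcile with the stated total, the document is escalated instead of being passed downstream.

```python
from decimal import Decimal


def reconcile_loss_run(claim_amounts, stated_total, tolerance=Decimal("1.00")):
    """Compare the sum of extracted claim amounts with the document's stated total.

    A mismatch usually means a row was missed or a digit was misread,
    so the result tells the caller to escalate to a human reviewer.
    """
    computed = sum(claim_amounts, Decimal("0"))
    if abs(computed - stated_total) > tolerance:
        return {
            "status": "escalate",
            "reason": f"extracted claims sum to {computed}, document states {stated_total}",
        }
    return {"status": "accept", "reason": None}


claims = [Decimal("12500.00"), Decimal("3200.50"), Decimal("48000.00")]
print(reconcile_loss_run(claims, Decimal("63700.50"))["status"])  # accept
print(reconcile_loss_run(claims, Decimal("68700.50"))["status"])  # escalate
```

`Decimal` is used instead of `float` so currency sums compare exactly.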

Understanding common failure modes helps organizations avoid the mistakes behind the 7 reasons why insurance AI projects fail, including unrealistic automation expectations and inadequate stakeholder alignment.

Building Systems That Get Stronger Through Real-World Use

The most successful AI implementations in insurance share a common characteristic: they improve through real-world usage rather than degrading when faced with new situations. This requires architecture designed for continuous learning rather than static operation.

When insurance-specific AI encounters new document types, unusual risk scenarios, or changing regulatory requirements, the system's learning mechanisms adapt to handle these situations more effectively in the future. Generic AI systems typically require manual intervention and formal retraining to handle new scenarios.

This difference becomes apparent over time. Insurance-specific systems become more valuable as they process more submissions and learn from more edge cases, while generic systems require increasing maintenance to prevent performance degradation.

Real-world success stories, like how RGA accelerated underwriting innovation, demonstrate the compound value that comes from systems designed to learn and adapt in production environments.

What Production-Ready Insurance AI Actually Looks Like

Immediate Value with Continuous Improvement

Successful AI implementations in insurance deliver measurable results from Day 1 while continuously improving over time. This dual capability requires architecture specifically designed for insurance workflows rather than generic solutions adapted for insurance use.

Production-ready systems typically achieve 5X processing speed improvements immediately while maintaining or improving accuracy rates. More importantly, they handle the document variety and edge cases that characterize real insurance operations without requiring extensive customization or ongoing technical support.

The key is combining immediate efficiency gains with learning capabilities that compound value over time. Each submission processed improves the system's ability to handle similar cases more accurately and efficiently in the future.

Companies implementing these solutions report measurable improvements in quote turnaround times, higher quote-to-bind ratios, improved underwriter satisfaction, and reduced compliance risk—all visible from the initial deployment.

Integration That Enhances Rather Than Replaces Existing Expertise

The most successful implementations work within existing underwriting workflows rather than requiring wholesale process changes. They enhance underwriter capabilities with better data extraction, risk pattern recognition, and decision support while preserving the human expertise that drives profitable underwriting.

This enhancement approach differs fundamentally from replacement-oriented AI that tries to eliminate human decision-making. Insurance-specific AI systems are designed to amplify human expertise by handling routine processing tasks and providing intelligent insights that support better decision-making.

Companies using this approach report that their underwriters become more productive and more accurate rather than feeling threatened or displaced by AI technology. The result is improved job satisfaction alongside improved business outcomes.

Practical applications include intelligent document processing that handles any document format while maintaining human oversight, and submission triage solutions that help underwriters focus on high-value opportunities.

Regulatory Compliance Built Into Architecture

Production-ready insurance AI includes regulatory compliance capabilities as core features rather than add-on considerations. This includes comprehensive audit trails, decision explainability, confidence scoring, and documentation systems that support regulatory examinations.

These compliance capabilities aren't limitations—they're competitive advantages that enable insurers to adopt AI technology confidently while meeting regulatory requirements. Companies using compliant-by-design AI systems can scale their implementations faster and with less legal risk than those trying to retrofit compliance into generic solutions.

The regulatory framework continues to evolve, with state insurance commissioners increasingly requiring explainable AI systems. Insurance-specific AI architecture is designed to meet these requirements from the outset rather than requiring extensive modification as regulations tighten.
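The audit-trail capability described in this section can be sketched as one log entry per automated output. This is a minimal sketch, assuming each value is logged as a single JSON line recording the model version, confidence, and any human override; `audit_record`, `append_to_log`, and every field name are hypothetical and are not taken from the NAIC bulletin or any vendor's schema. Hashing the source document lets an examiner confirm which file a decision was based on without copying the file into the log.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(document_bytes, field, model_value, confidence, model_version,
                 reviewer=None, final_value=None, rationale=None):
    """Build one audit entry for an extracted (and possibly human-reviewed) value."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "document_sha256": hashlib.sha256(document_bytes).hexdigest(),
        "field": field,
        "model_version": model_version,
        "model_value": model_value,
        "confidence": confidence,
        "reviewer": reviewer,  # None means no human touched this value
        "final_value": final_value if final_value is not None else model_value,
        "overridden": final_value is not None and final_value != model_value,
        "rationale": rationale,
    }


def append_to_log(path, record):
    """Append the record as one JSON line; this function never rewrites earlier lines."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record, sort_keys=True) + "\n")


entry = audit_record(b"example submission bytes", "roof_age", "15", 0.42,
                     "extractor-v1", reviewer="jdoe", final_value="22",
                     rationale="Corrected from property survey, page 3")
print(entry["overridden"], entry["final_value"])  # True 22
```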

The Path Forward: Choosing Architecture Over Generic Solutions

The Critical Questions Every Insurance Leader Should Ask

Before investing in AI technology, insurance organizations should evaluate whether they're considering generic solutions adapted for insurance or systems designed specifically for insurance from the ground up:

- Does this AI understand insurance documents? Can it handle the variety of formats, the contextual information, and the industry-specific terminology that characterizes real submissions?

- Can it learn from our underwriters? Does the system improve based on expert feedback, or does it require formal retraining cycles that can't keep pace with market changes?

- Will it work with our existing processes? Does implementation require wholesale workflow changes, or does it enhance current operations with better data and insights?

- Can we explain its decisions to regulators? Does the system provide the transparency and documentation required for regulatory compliance, or does it create compliance risk?

- Does it deliver immediate value? Can we see measurable improvements from Day 1, or does it require extensive customization and training before delivering results?

These questions help distinguish between generic AI solutions with insurance marketing and purpose-built insurance AI systems designed for the industry's unique challenges.

Moving Beyond the 95% Failure Rate

The companies that avoid the 95% failure rate share a common approach: they choose AI systems designed specifically for insurance rather than trying to adapt generic solutions. This choice determines whether AI initiatives deliver sustainable competitive advantages or join the long list of failed pilots.

Success factors include starting with best practices for implementing insurance AI that emphasize stakeholder alignment, clear success metrics, and incremental deployment strategies that build confidence through demonstrated results.

As the Carrier Management analysis concludes, the best organizations don't ask whether AI worked, but rather answer "the sharper question: 'Is this worth scaling, and how do we know?'" For insurance companies, answering this question successfully requires AI architecture that matches industry complexity rather than generic solutions that work well in simpler environments.

The Bottom Line: Specialized Solutions for Specialized Industries

Insurance's 95% AI failure rate isn't primarily an implementation problem—it's a solution selection problem. Generic AI solutions that excel in controlled environments with standardized inputs fail catastrophically when faced with insurance's document variety, regulatory complexity, and risk assessment sophistication.

The 5% that succeed understand that insurance requires specialized AI architecture designed for the industry's unique challenges. These systems deliver immediate value while continuously improving, integrate with existing workflows while enhancing expertise, and provide regulatory compliance while enabling competitive advantage.

The insurance industry stands at a decision point. Organizations can continue trying to adapt generic AI solutions and join the 95% failure rate, or they can invest in specialized AI architecture designed for insurance's unique challenges. The companies that choose specialized solutions will build sustainable competitive advantages while others remain trapped in pilot purgatory.

The path beyond the 95% failure rate requires AI systems designed for insurance complexity from Day 1. Companies like SortSpoke demonstrate how insurance-specific AI architecture combining ML, LLMs, and human-in-the-loop validation can deliver production-ready results immediately while continuously improving through real-world use. Success isn't about better implementation of generic solutions—it's about choosing technology built for insurance's unique demands.