4 Ways to Staff Your Human-in-the-Loop AI Operation

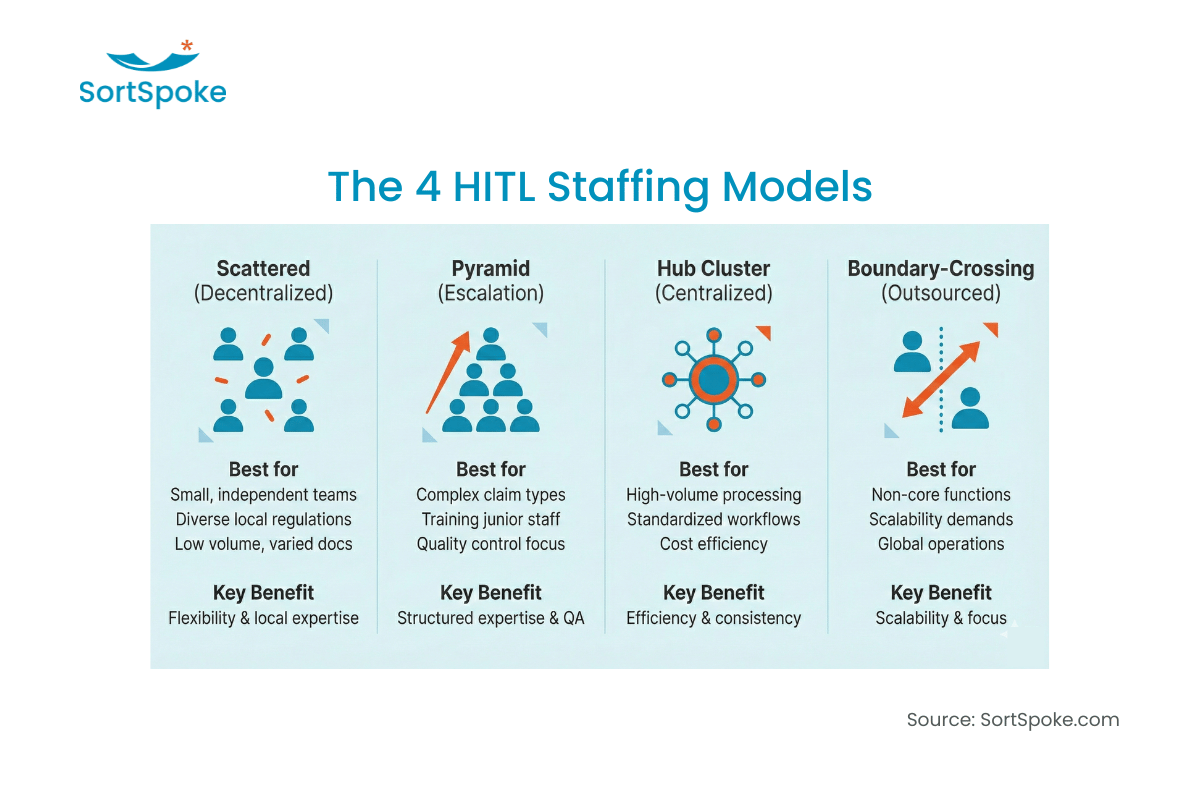

TL;DR

- Decentralized: Existing staff handles verification—low change management, quick to start, best for pilots and smaller teams

- Escalation: Junior staff + senior experts in tiers—preserves expert time, good for complex product lines

- Centralized: Dedicated HITL team—consistent quality and deep expertise, best for high-volume operations (1,000+ documents daily)

- Outsourced: Third-party handles verification—flexible capacity and faster scaling, good for variable volumes

The conversation has shifted.

A year ago, insurance leaders were asking "Should we trust AI with underwriting work?" Now they're asking a different question: "We've committed to human-in-the-loop AI—who handles the human part?"

If you've followed the research, you know why the best insurance AI keeps humans in the driver's seat: trust from underwriting teams is 60% with a human in the loop versus 16% without. That nearly fourfold jump in trust isn't a nice-to-have; it's the difference between a pilot that scales and one that dies.

But understanding why HITL works doesn't answer the practical question: how do you actually organize it?

There's no single right answer. The best staffing model depends on your organization's size, volume, expertise distribution, and strategic goals. Here are four approaches we've seen work—and when each one makes sense.

Model 1: Decentralized (Existing Staff)

What it is: Your current underwriters and processors handle AI verification as part of their normal workflow. The AI processes documents, flags exceptions, and your existing team reviews and corrects as needed.

Best for: Pilots, smaller teams, distributed operations

This is where most organizations start—and for good reason. You're not asking anyone to change their job or learn a new role. You're simply adding a tool that handles the routine work while your people focus on the decisions that require judgment.

The advantages:

- Low change management. No new teams to build, no organizational restructuring. People keep doing their jobs, just with better tools.

- Leverages existing expertise. Your underwriters already know the business. They can catch AI errors that someone outside the domain would miss.

- Quick to implement. You can start within weeks, not months.

The limitations:

- Inconsistent quality. Different reviewers may apply different standards. One underwriter might accept what another would correct.

- No specialization. Your team doesn't develop deep expertise in AI verification—it's just one more thing on their plate.

- Scattered feedback. AI improvements happen more slowly because corrections come from many reviewers and are never aggregated in one place where patterns would show up.

When to use it: This model works well for pilots, for organizations with fewer than 50 underwriters, or for geographically distributed teams where centralization isn't practical. It's also the right starting point when you're not yet sure how much volume you'll need to process.

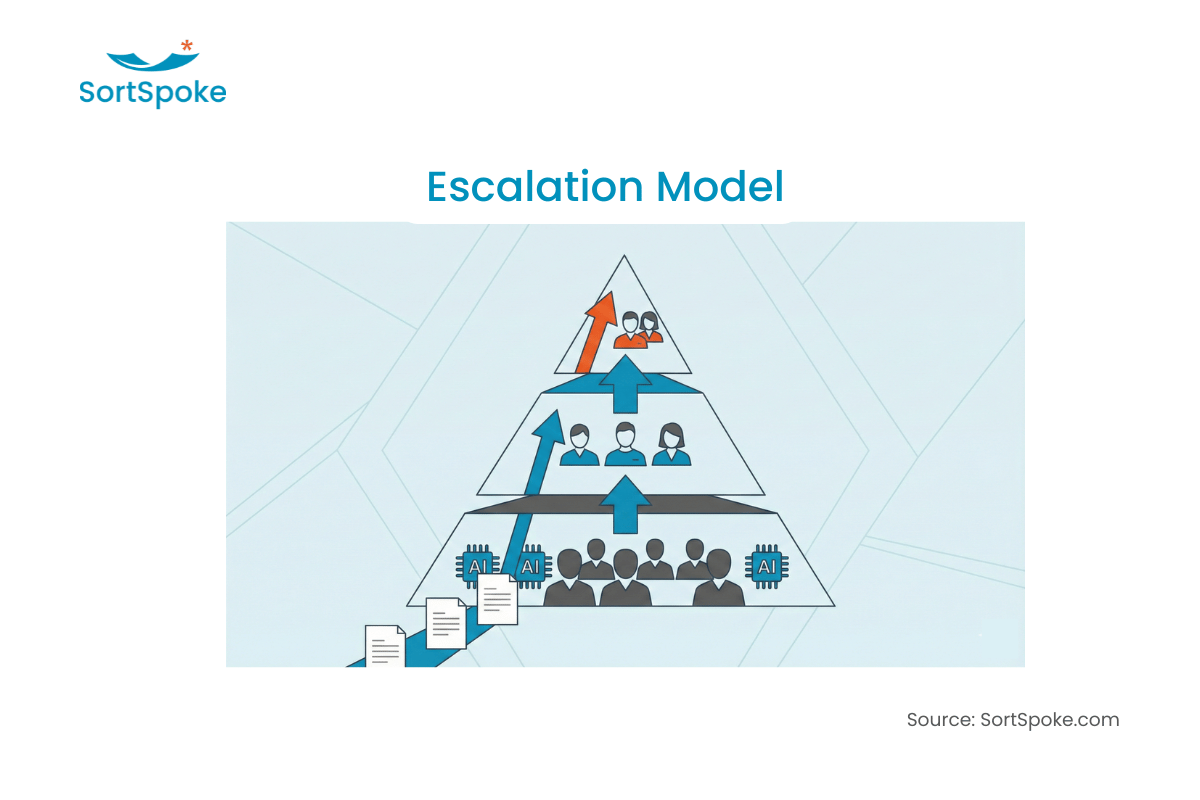

Model 2: Escalation to Senior/SMEs

What it is: A tiered approach. Routine submissions are handled by AI with junior staff providing basic verification. Complex cases, edge cases, and exceptions escalate to senior underwriters or subject matter experts for final review.

Best for: Organizations with clear expertise hierarchies, complex product lines

This model recognizes that not all human verification is equal. A data entry correction doesn't require the same expertise as evaluating a borderline risk decision. By tiering the work, you preserve your most experienced people's time for the decisions that actually need it.

The advantages:

- Preserves expert time. Senior underwriters focus on complex decisions instead of routine verification.

- Natural knowledge transfer. Junior staff learn from seeing what gets escalated and why. Over time, they need to escalate less.

- Matches how teams already work. Most underwriting operations already have some form of escalation structure.

The limitations:

- Bottlenecks at the top. If escalation criteria are too broad, senior staff get overwhelmed. If too narrow, quality suffers.

- Requires clear escalation rules. Someone has to define what gets escalated and what doesn't—and those rules need constant refinement.

- Can create junior frustration. If junior staff are only doing routine verification, they may not develop the judgment needed to advance.

When to use it: This model works well when expertise is concentrated in a small senior team, when you have complex or specialty lines that require deep domain knowledge, or when you're trying to preserve and transfer institutional knowledge as senior staff approach retirement.
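
The escalation rules mentioned above are easier to refine when they are written down explicitly. A minimal sketch, assuming each submission carries an AI confidence score, a specialty-line flag, and a count of fields the junior reviewer corrected. The field names and thresholds here are hypothetical placeholders, not values from any particular system:

```python
from dataclasses import dataclass


@dataclass
class Submission:
    doc_type: str
    ai_confidence: float       # 0.0-1.0, hypothetical score from the AI step
    specialty_line: bool       # complex or specialty product line
    fields_corrected: int = 0  # corrections made during junior review


# Hypothetical thresholds; in practice these need regular tuning.
CONFIDENCE_FLOOR = 0.85
MAX_JUNIOR_CORRECTIONS = 3


def review_tier(sub: Submission) -> str:
    """Return "senior" if the submission should escalate, else "junior"."""
    if sub.specialty_line:
        return "senior"
    if sub.ai_confidence < CONFIDENCE_FLOOR:
        return "senior"
    if sub.fields_corrected > MAX_JUNIOR_CORRECTIONS:
        return "senior"
    return "junior"
```

Loosening the thresholds sends more work to senior staff (the bottleneck risk); tightening them keeps more work with junior staff (the quality risk). Tracking how often each rule fires is one way to decide which direction to adjust.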

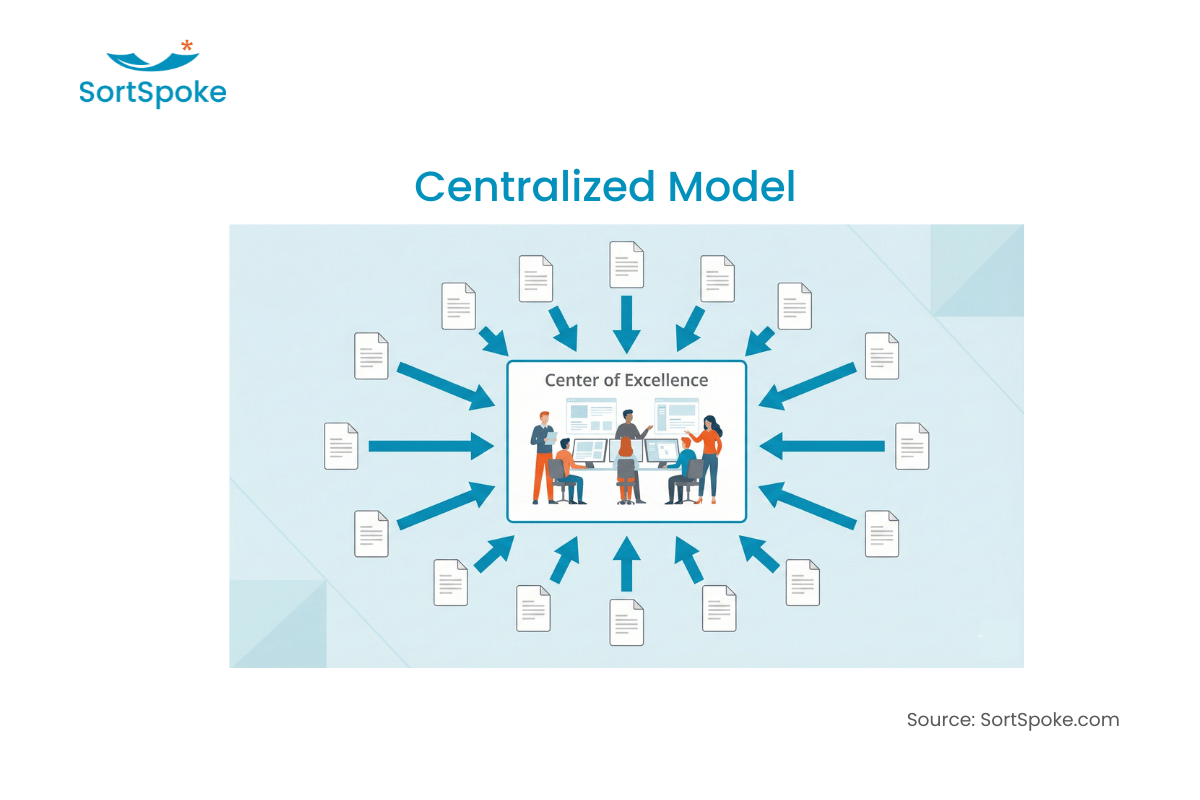

Model 3: Centralized HITL Team

What it is: A dedicated team handles all human-in-the-loop verification across the organization. This team specializes in AI verification, develops deep expertise in the patterns and edge cases, and becomes the center of excellence for HITL operations.

Best for: High-volume operations, organizations requiring consistent quality, companies with multiple AI use cases

When you're processing thousands of submissions, loss runs, or claims documents daily, a decentralized approach breaks down. A centralized team provides the consistency and specialization that high-volume operations require.

The advantages:

- Consistent quality. Same standards applied across all work. Easier to calibrate and maintain quality.

- Deep expertise. Team members develop specialized skills in AI verification that generalists never would.

- Measurable and optimizable. Centralized operations are easier to measure, benchmark, and improve.

- Cross-training opportunities. The team can absorb peaks in one document type by shifting reviewers from another.

The limitations:

- Higher upfront investment. You need to hire or redeploy staff, define roles, and build processes.

- Organizational change required. Work that used to happen in business units now moves to a shared service.

- Risk of disconnection. Centralized teams can lose touch with the business context if not managed carefully.

When to use it: This model makes sense when you're processing high volumes (1,000+ documents daily), when you need consistent quality across multiple product lines, or when you're deploying AI across multiple use cases (submissions, loss runs, claims) and want a single team to handle all verification.

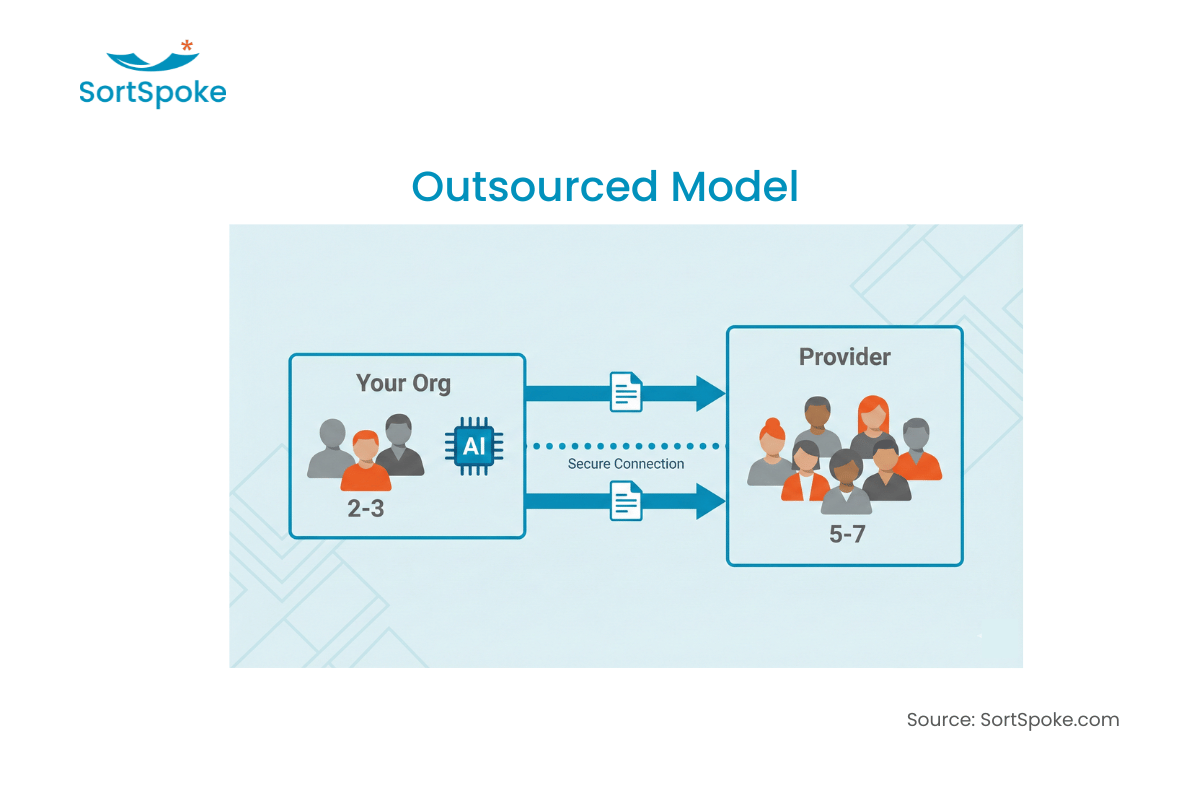

Model 4: Outsourced HITL

What it is: A third-party provider handles the human verification layer. You send documents through AI processing, and the outsourced team handles review, correction, and exception handling.

Best for: Rapid scaling, variable volumes, cost optimization

Outsourcing isn't right for every organization, but it solves specific problems that internal models struggle with: sudden volume spikes, cost pressure, and the difficulty of building specialized teams quickly.

The advantages:

- Flexible capacity. Scale up for peak seasons, scale down when volume drops.

- Lower fixed costs. Pay for verification when you need it, not year-round.

- Faster implementation. Skip the hiring and training—the provider already has trained staff.

The limitations:

- Less institutional knowledge capture. Corrections and learnings may not flow back to your organization as effectively.

- Quality control challenges. You're dependent on the provider's standards and training.

- Security considerations. Sensitive data leaving your organization requires additional controls.

When to use it: This model works well for handling overflow capacity during peak seasons, for organizations with highly variable volumes, or for companies that want to test HITL at scale before committing to building internal capabilities.

Choosing Your HITL Model (Or Combining Them)

Here's the reality: most successful organizations don't pick one model and stick with it forever. They evolve.

Three questions to guide your decision:

- Where are you on the adoption curve? Early pilots favor decentralized. At-scale operations favor centralized.

- Where is your expertise concentrated? If you have a few senior experts and many junior staff, escalation models work well. If expertise is distributed, decentralized may be fine.

- How variable is your volume? Steady, high volume favors internal centralized teams. Highly variable volume may benefit from outsourced capacity.
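
The three questions can be read as a rough decision aid. The sketch below is one illustrative encoding of them, not a definitive rule set: the function name and input labels are assumptions, and the 1,000-documents-per-day cutoff is the high-volume figure used earlier in this article.

```python
from typing import List

HIGH_VOLUME_DOCS_PER_DAY = 1000  # high-volume figure cited in this article


def suggest_models(stage: str, expertise: str,
                   daily_docs: int, variable_volume: bool) -> List[str]:
    """Suggest staffing models to consider.

    stage: "pilot" or "scale"
    expertise: "concentrated" (few senior experts) or "distributed"
    """
    suggestions: List[str] = []

    # Question 1: where are you on the adoption curve?
    if stage == "pilot":
        suggestions.append("decentralized")
    elif daily_docs >= HIGH_VOLUME_DOCS_PER_DAY:
        suggestions.append("centralized")
    elif expertise == "distributed":
        suggestions.append("decentralized")

    # Question 2: where is your expertise concentrated?
    if expertise == "concentrated":
        suggestions.append("escalation")

    # Question 3: how variable is your volume?
    if variable_volume:
        suggestions.append("outsourced")

    return suggestions
```

For example, `suggest_models("scale", "concentrated", 5000, True)` returns `["centralized", "escalation", "outsourced"]`, which reflects how often the answer is a combination rather than a single model.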

Hybrid approaches are common. As McKinsey's 2025 workplace research notes, the AI era "shifts the focus of human intelligence from execution to orchestration and judgment"—and companies are increasingly hiring dedicated "human-in-the-loop validators" to fill this role.

We see organizations use decentralized models for straightforward document types (ACORD forms) while maintaining centralized teams for complex documents (SOVs, loss runs). Or they handle steady-state volume internally while outsourcing overflow during peak seasons.
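
A hybrid setup like this amounts to a small routing table plus an overflow rule. A minimal sketch, assuming a hypothetical document-type label on each item and a simple queue-depth check for overflow. The names and the default for unknown document types are illustrative choices:

```python
# Hypothetical mapping from document type to the team that verifies it.
ROUTES = {
    "acord_form": "decentralized",  # straightforward documents
    "sov": "centralized",           # complex documents
    "loss_run": "centralized",
}


def route(doc_type: str, queue_depth: int, internal_capacity: int) -> str:
    """Pick a verification team for one document."""
    # Overflow rule: once the internal queue is full, send work out.
    if queue_depth >= internal_capacity:
        return "outsourced"
    # Assumption: unknown document types go to the centralized team.
    return ROUTES.get(doc_type, "centralized")
```

Keeping the table separate from the overflow rule makes it straightforward to move a document type between teams as the organization evolves.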

The evolution path: most organizations follow a similar trajectory.

- Start decentralized during pilots

- Move to escalation as they identify patterns

- Build centralized teams as volume grows

- Add outsourced capacity for flexibility

The key is designing for evolution from the start. Your Day 1 staffing model shouldn't lock you into a structure that doesn't work at scale. (Before you commit, run through these 9 questions to ask any underwriting AI vendor.)